Fabian Denoodt

About

I’m a Ph.D. student in Artificial Intelligence. My research focuses on making deep learning models more trustworthy and reliable. I want to ensure that the models I train solve the actual problem, not just find shortcuts in the data. To achieve this, I explore different approaches, such as designing neural networks that are easier to interpret, measuring how certain models are in their predictions, and using special layers to guide the model’s output.

Education

- Ph.D., Artificial Intelligence (Ongoing)

- M.S., Computer Science (Greatest Distinction)

- B.S., Applied Information Technology (Great Distinction)

Work Experience

(1) PhD Candidate @ Eindhoven University of Technology (2025 - Present)

- I am currently pursuing a PhD at the Eindhoven University of Technology, where I research efficient uncertainty quantification in deep learning. My work is supervised by Joaquin Vanschoren and Sibylle Hess.

(2) Visiting Researcher @ the University of Amsterdam (2025)

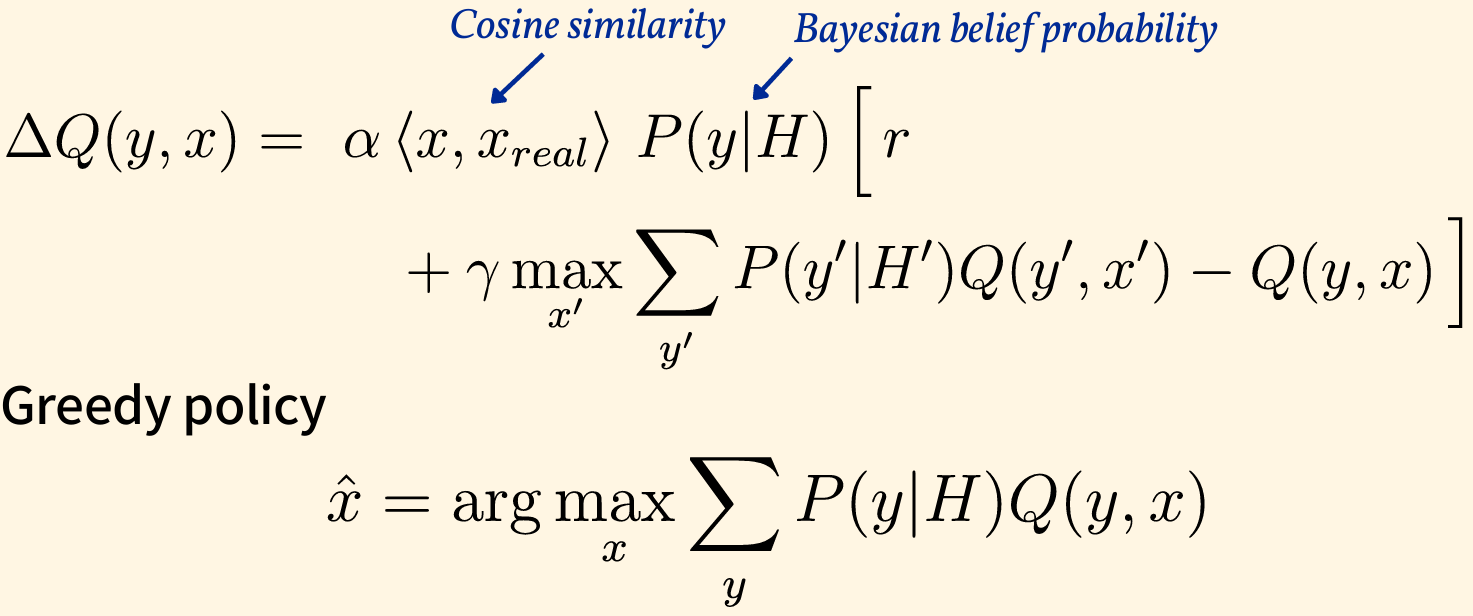

- In 2025, I was awarded a 5-month research grant to join AMLab at the University of Amsterdam. During this visit, supervised by Christian Andersson Naesseth, I investigated dynamic inference-time techniques for Bayesian Neural Networks, focusing on methods that provide any-time confidence intervals.

(3) Teaching Assistant @ the University of Antwerp (2023 - 2025)

- From 2023 to 2025, I worked as a Teaching Assistant at the University of Antwerp. I taught lab sessions for five courses: Artificial Intelligence, Artificial Neural Networks, Numerical Linear Algebra, Advanced Programming in C++, and Distributed Systems.

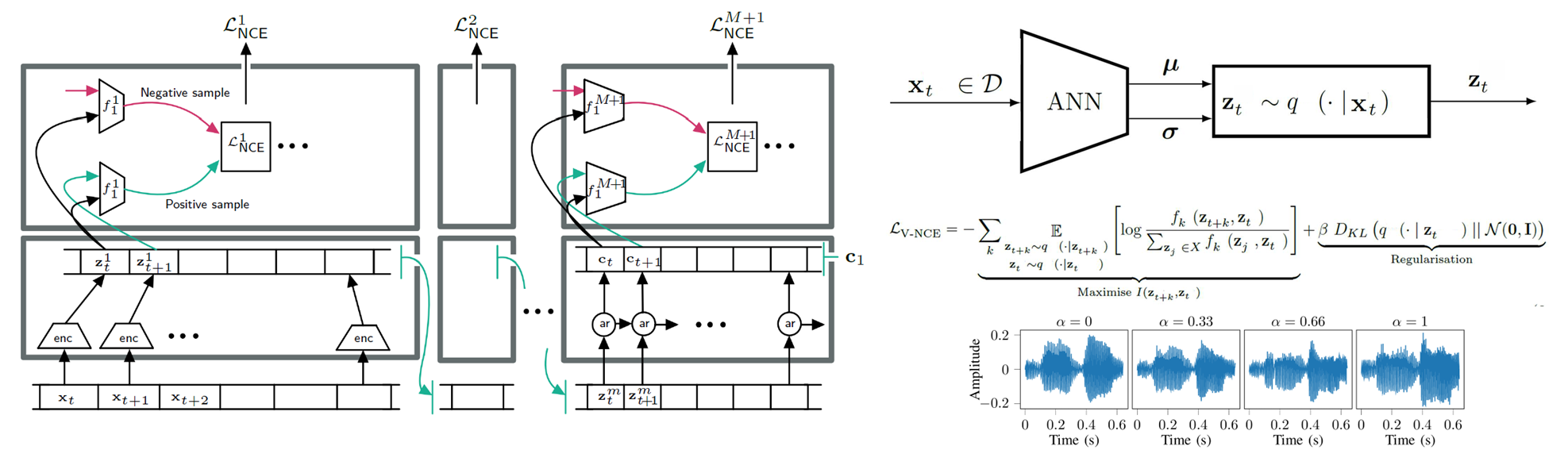

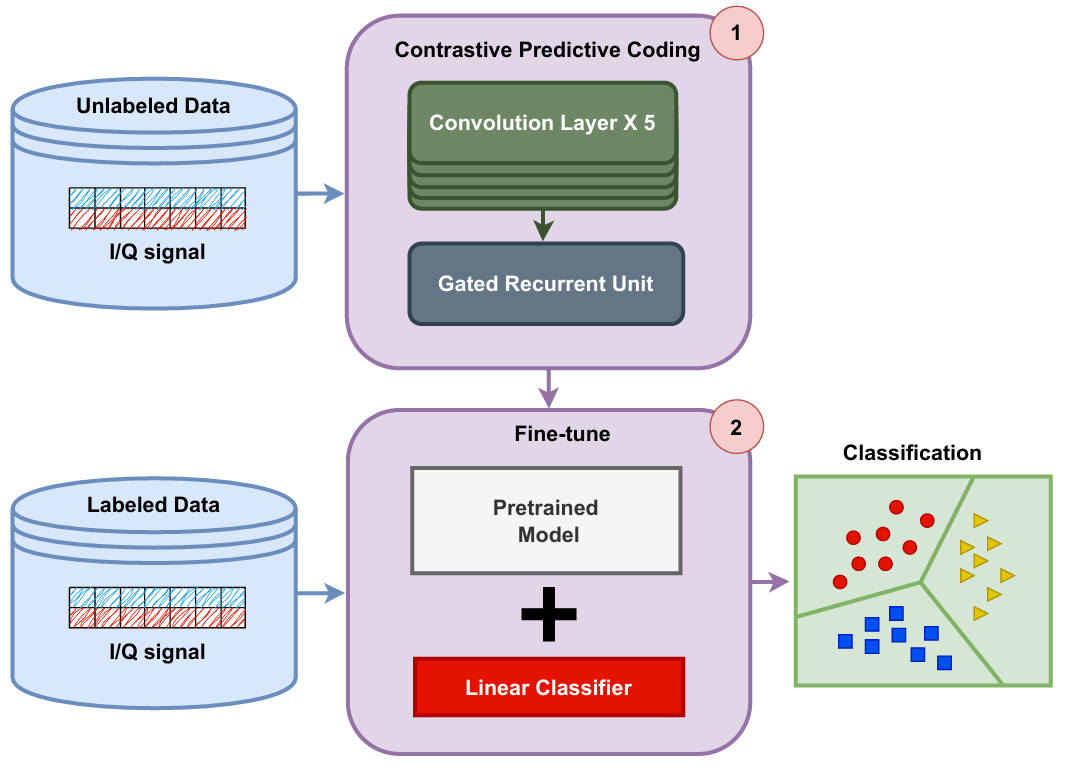

- In parallel, I conducted research on self-supervised representation learning, resulting in two first-author publications: one on applied self-supervised learning and another on interpretable-by-design networks using generative models. I also received a 5-month research grant for a research visit to AMLab at the University of Amsterdam.

(4) Computer Vision Research Engineer @ Puratos (2022, Internship)

- During this internship, I developed a computer vision pipeline to automatically measure bread porosity using conventional image segmentation techniques. A key challenge of the project was detecting bread pores without access to any annotated data.

(5) Data Engineer @ Achmea (the Netherlands) (2020, Internship)

- I created a machine learning pipeline that allows employees to quickly build their own machine learning models (similar to AutoML).

- The developed product includes a web application where users can annotate image data and train new models.

- Several evaluation techniques have been implemented to assess the models. When a model does not perform satisfactorily, the web application automatically generates advice on how to improve model performance.

Grade: 16/20

Technologies:

- Machine Learning: Python, TensorFlow, Keras, YOLOv3

- Software development: Angular, .NET Core, Azure Services (e.g. Azure Databricks, Azure Blob Storage)

Highlighted Projects

(1) Smooth InfoMax - Novel Method for Better-Interpretable-By-Design Neural Networks.

Deep Neural Networks are inherently difficult to interpret, mostly due to the large number of neurons to analyze and the entangled nature of the concepts learned by these neurons. To address this, I propose adding interpretability constraints to the model, allowing for easier post-hoc interpretability.

(2) Image colorization - Paper implementation

- For a school group assignment, two fellow students and I implemented an image colorization model using PyTorch, based on the paper “Colorful Image Colorization” by Richard Zhang, Phillip Isola, and Alexei A. Efros. The paper proposes a method for converting grayscale images to color using an autoencoder-based Neural Network.

- The images below show some of our results; row 1: ground truth images, row 2: grayscale images serving as input, and row 3: model predictions. It seems to work quite well.

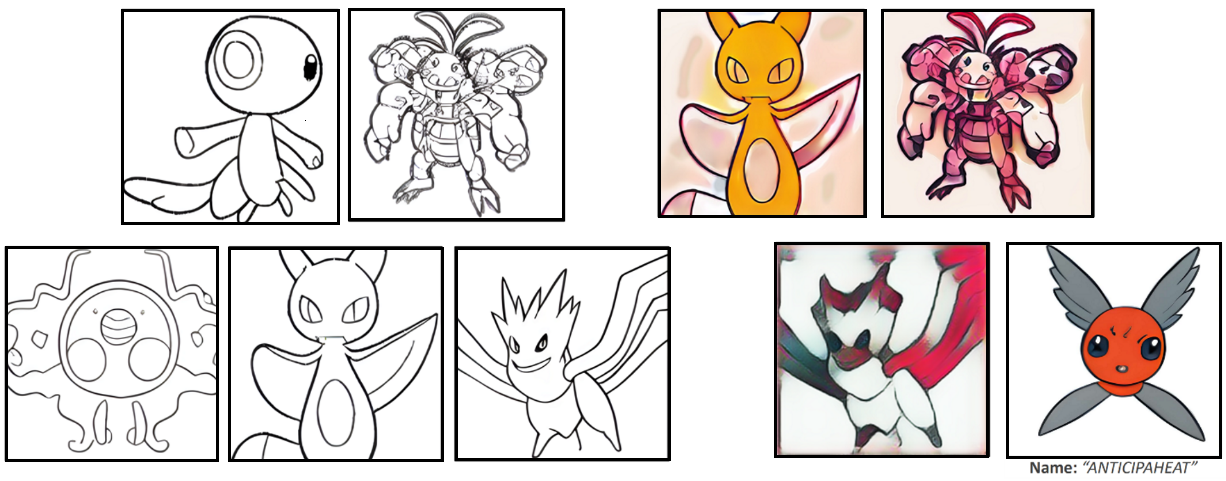

(3) Pokémon Generator based on Transfer Learning

- For a computational creativity assignment, I generated fake Pokémon images using the open-source text-to-image model ruDALLE. I fine-tuned the model on images of a specific Pokémon type and also used the pretrained weights to generate outlines of Pokémon sketches.

- The generation of the names was also automated; the creative system took a few input words, made some permutations, and selected the best permutation, evaluated using a linear classifier trained on Pokémon names. The linear classifier then ranked the generated names and selected the most plausible option.

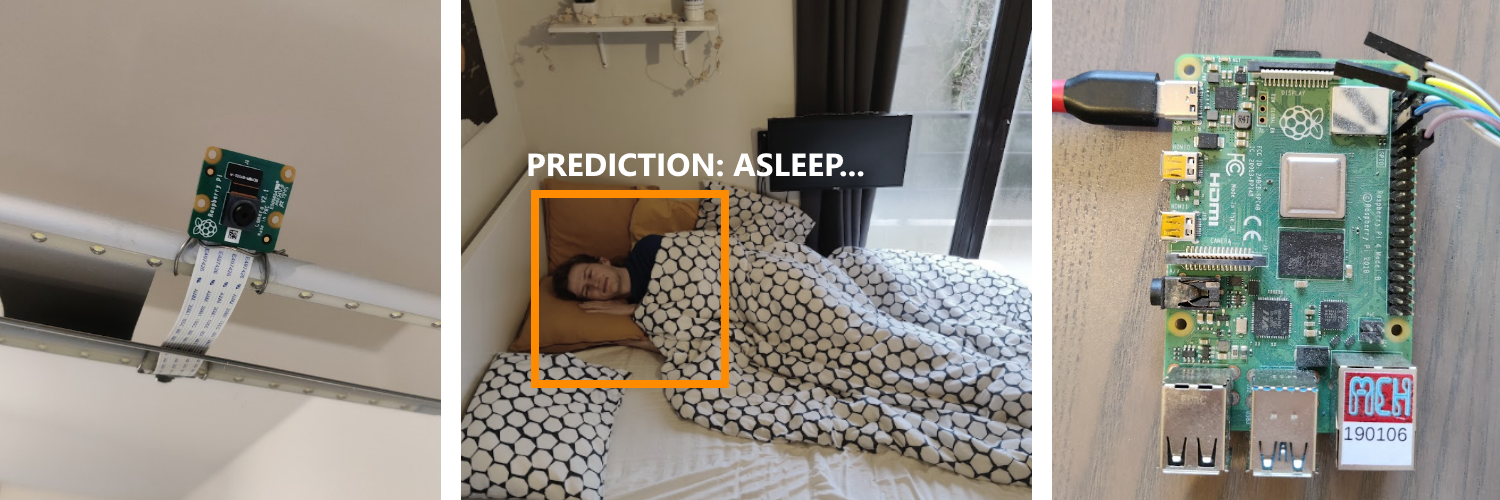

(4) Image recognition alarm

- Because waking up can be hard, I made a smart alarm to help me out. The alarm contains a camera that is pointed at my bed and detects whether I am still in it. When it is time to wake up, the alarm keeps playing music for as long as I stay in bed. It stops only once I get out of bed.

- The alarm consists of a Raspberry Pi, a camera, and speakers. Classification is done using a Convolutional Neural Network developed in Python with Keras. The front end is developed in Angular.

(5) Two genetic algorithms for solving the Traveling Salesman Problem

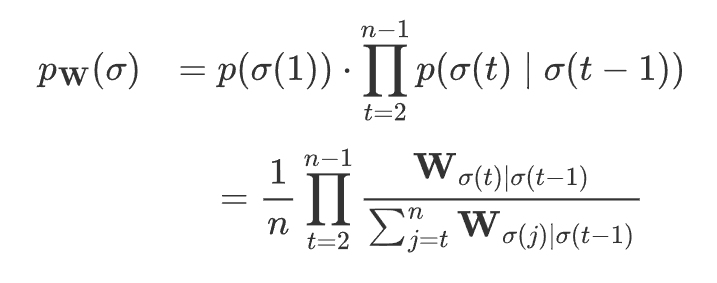

- The methods consist of a conventional selection-mutation-crossover approach and a more research-oriented approach based on gradient descent for discrete domains. The second extends the Plackett-Luce model with a new probability representation, defined as a first-order Markov chain, as shown below:

- While the equations may look fancy, the method doesn’t really work that well in practice (that’s the downside of building upon less-established methods I guess 😅).

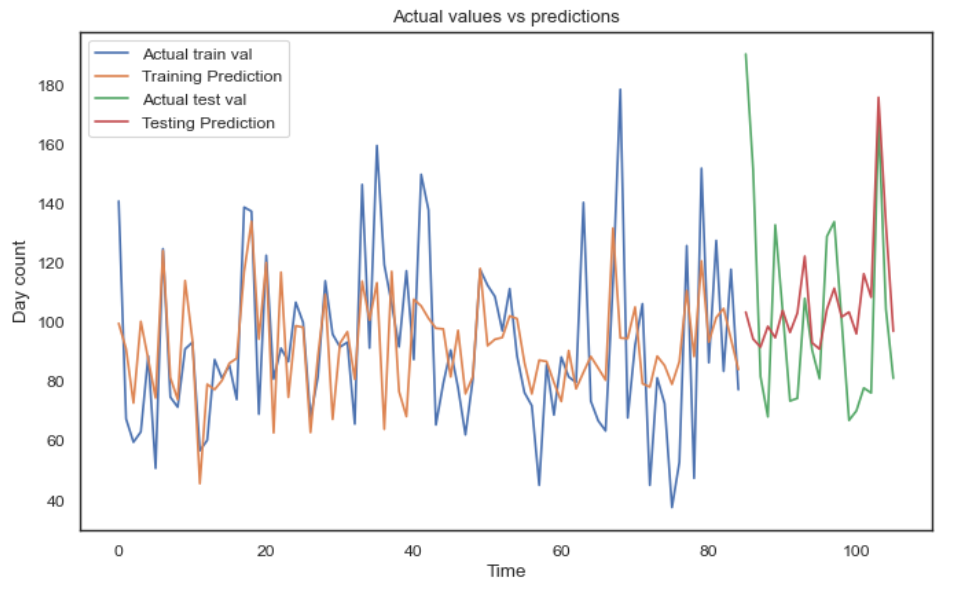
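The conventional selection-mutation-crossover approach can be illustrated with a minimal sketch. This is not the project's code: the function names, tournament selection, order crossover, swap mutation, elitism, and all parameter values are assumptions chosen to keep the example short.

```python
import math
import random


def tour_length(tour, cities):
    """Total length of the closed tour visiting cities in the given order."""
    return sum(
        math.dist(cities[tour[i]], cities[tour[(i + 1) % len(tour)]])
        for i in range(len(tour))
    )


def order_crossover(parent_a, parent_b, rng):
    """Copy a random slice from parent_a, fill the gaps in parent_b's order."""
    n = len(parent_a)
    start, end = sorted(rng.sample(range(n), 2))
    child = [None] * n
    child[start:end + 1] = parent_a[start:end + 1]
    kept = set(child[start:end + 1])
    fill = [city for city in parent_b if city not in kept]
    for i in range(n):
        if child[i] is None:
            child[i] = fill.pop(0)
    return child


def swap_mutation(tour, rng, rate=0.2):
    """With probability `rate`, swap two randomly chosen cities."""
    tour = tour[:]
    if rng.random() < rate:
        i, j = rng.sample(range(len(tour)), 2)
        tour[i], tour[j] = tour[j], tour[i]
    return tour


def tournament(population, cities, rng, k=3):
    """Selection: return the shortest of k randomly drawn tours."""
    return min(rng.sample(population, k), key=lambda t: tour_length(t, cities))


def solve_tsp(cities, pop_size=50, generations=200, seed=0):
    """Evolve a population of tours and return the shortest one found."""
    rng = random.Random(seed)
    n = len(cities)
    population = [rng.sample(range(n), n) for _ in range(pop_size)]
    for _ in range(generations):
        # Elitism (an assumption of this sketch): keep the best tour unchanged.
        best = min(population, key=lambda t: tour_length(t, cities))
        next_gen = [best]
        while len(next_gen) < pop_size:
            a = tournament(population, cities, rng)
            b = tournament(population, cities, rng)
            next_gen.append(swap_mutation(order_crossover(a, b, rng), rng))
        population = next_gen
    return min(population, key=lambda t: tour_length(t, cities))
```

Calling `solve_tsp` with a list of `(x, y)` coordinates returns a permutation of city indices. Because the best tour is always carried over, the best length in the population never gets worse from one generation to the next.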

(6) Kaggle competition - Appliances regression

- Problem statement: To forecast the energy consumption of appliances in a house at a given time.

- My proposed solution consisted of different regression models, including linear regression, decision trees, boosting regression, and support vector regression. I also went through the full machine learning pipeline, including data visualization, data preprocessing, cross-validation for time series data, feature engineering, and model training with parameter tuning.

- I ranked within the top 10% of the leaderboard.
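Cross-validation for time series differs from ordinary k-fold in that the training data must always precede the test data. The sketch below shows one common scheme, an expanding window; the function name and parameters are hypothetical, and nothing here is taken from the competition code, which may have used a different scheme.

```python
def expanding_window_splits(n_samples, n_splits, test_size):
    """Yield (train_indices, test_indices) pairs in chronological order.

    Each test fold directly follows its training data, so the model is
    never evaluated on samples older than the ones it was trained on.
    """
    first_test_start = n_samples - n_splits * test_size
    if first_test_start <= 0:
        raise ValueError("not enough samples for the requested splits")
    for k in range(n_splits):
        test_start = first_test_start + k * test_size
        train = list(range(test_start))
        test = list(range(test_start, test_start + test_size))
        yield train, test


for train, test in expanding_window_splits(n_samples=10, n_splits=3, test_size=2):
    print(train, test)
# [0, 1, 2, 3] [4, 5]
# [0, 1, 2, 3, 4, 5] [6, 7]
# [0, 1, 2, 3, 4, 5, 6, 7] [8, 9]
```

Libraries such as scikit-learn offer a comparable splitter (`TimeSeriesSplit`), which is usually the more practical choice in a real pipeline.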

Publications

- European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases, 2025.

- IEEE Wireless Communications and Networking Conference, 2025.

- Adaptive and Learning Agents Workshop, 2023.
